Commentaries, like any books, can be good or bad though it can be hard to articulate why we feel some commentaries are better than others. I think that’s because we all assume that commentaries should be doing something, but we aren’t sure exactly what that something is.

In general, I think most people would agree that commentaries attempt five general tasks: (1) give the reader a better historical understanding of the world in which the text was produced and (2) in which the first audiences resided; (3) interact substantially with the original text; (4) give some attention to what the text might mean to modern readers (e.g., through reception history or theological reflection); (5) add something new either through new evidence or new methods. No matter the type of commentary, whether “devotional” or “academic,” all commentaries attempt these tasks.

Along that same line, we can all agree that commentaries should not be good or bad based on who uses them. Merit, and not popularity, should be the definitive criterion when choosing commentaries. For example, how successfully does the commentary perform each of the five tasks above? Does it simply quote other scholars and fail to add something new (5)? Does it do well with the original languages (3) but fail with historical information (1 & 2)?

This is not the approach of BestCommentaries.com.

As someone with even a cursory interest in researching the Bible, you’ve probably come across John Dyer’s website BestCommentaries.com (BC). BC attempts to list and rank commentaries on the Bible in order “to help students at all levels to make good, informed decisions about which commentaries they should purchase.” Dyer knows that “scores and ratings alone” can’t tell the whole story, but he hopes “the combined resources available through this site points [students or pastors] in the right direction.”

In reality, BC points male students/pastors in the conservative, reformed, white evangelical American direction.

A few caveats. BC’s current state is not entirely Dyer’s fault since ratings are crowd-sourced, and it may not be what he originally envisioned. It should also be noted that there is some value in doing exactly what BC does now for various reasons, purposes, and audiences. Dyer’s website is also, as far as I can tell, the best database of somewhat current and upcoming English commentaries. The labels (technical, pastoral, etc.) are also generally helpful. BC also displays the types of commentaries conservative Americans tend to prioritize, and it is certainly helpful when looking for a comprehensive list of commentaries on a particular book even if the rating system is flawed.

So what’s wrong with BC?

1. The methodology is flawed.

The methodology is straightforward. The average rating from users, journals, and featured reviewers is compiled (x). The number of reviews (y) is compiled, with more weight given to a reviewer who gives ratings for more works. In other words, if someone reviews 30 books, their reviews count more than those of someone who reviews one book. Finally, users can create a library on the site and include whatever books they own or desire. The number of times a given commentary appears in users’ libraries (z) is added to the ratings. So, a commentary’s score = x + (y/10) + (z/10). Carson’s John commentary has the highest score (14.91), which is converted to 100. Other commentaries are scaled down from that.
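To make the arithmetic concrete, here is a minimal sketch of the formula as I have described it. The function names and the sample inputs are my own and are purely hypothetical; this is not BC's actual code or data, and I am assuming that "converted to 100" means a simple proportional scaling against the top raw score of 14.91.

```python
def raw_score(avg_rating: float, weighted_reviews: float, library_count: int) -> float:
    """Raw score as described in the post: x + (y/10) + (z/10)."""
    return avg_rating + weighted_reviews / 10 + library_count / 10


def scaled_score(raw: float, top_raw: float = 14.91) -> float:
    """Scale a raw score so the top-ranked commentary reads 100 (assumed proportional)."""
    return raw / top_raw * 100


# Hypothetical inputs for illustration only, not real BC numbers.
# A widely owned commentary with a slightly lower average rating:
popular = raw_score(avg_rating=4.5, weighted_reviews=20, library_count=60)
# A rarely owned commentary with a perfect average rating:
obscure = raw_score(avg_rating=5.0, weighted_reviews=2, library_count=1)

print(f"popular: raw={popular:.2f}, scaled={scaled_score(popular):.1f}")
print(f"obscure: raw={obscure:.2f}, scaled={scaled_score(obscure):.1f}")
```

Under these assumptions, every ten library appearances add a full point to the raw score, as much as an entire star of average rating, so the widely owned commentary lands far above the better-rated but rarely owned one.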

We are told the methodology weights academic reviews of works that many people may not own, but it is not clear how this works or what sources are considered academic. For example, is RBL considered more academic than Carson’s list? Is John Piper more academic than Jeremy Pierce (featured reviewer, PhD, Syracuse)? Is Challies more academic than Carson, and how is that determined?

Since this methodology relies on the popularity of certain commentaries, the scores actually show us which commentaries select reviewers use. The select reviewers, as shown below, are a very specific subsection of Christianity. The surveyed subjects are largely white males who are reformed, conservative, American, Protestant, and evangelical.

Best Commentaries isn’t showing readers which commentary is best. Rather, it shows which commentaries are popular among white American evangelical men with a large bent towards Reformed traditions.

One might object that other viewpoints just aren’t as readily available as the white American male, etc. perspective. But even a cursory Google search shows that this isn’t true. One could integrate the egalitarian Christians for Biblical Equality’s list, Catholic recommendations from Scott Hahn, or mainline recommendations from Princeton Seminary or Yale Divinity. Reviews from British journals like JSNT would give a non-North American opinion. RBL reviews are already linked but are integrated poorly (see below).

But to prove my thesis, I need to demonstrate the hegemony. Looking at who these featured reviewers are, when they reviewed these commentaries, and for whom they reviewed them should shed some light on this aspect.

2. Featured reviewers are homogeneous and wrote years ago for specific audiences.

First, nearly all reviews are given 5 stars. I assume Dyer’s reasoning is that only X number of commentaries are recommended, therefore they should be counted as a 5 star rating. In reality, this actually gives a ton of weight to the featured reviews. Not only is the commentary given extra weight because it is reviewed at all, but it is given 5 stars which bumps it to the top of the rankings.

Second, nearly all featured reviewers are white reformed evangelical American men who are recommending commentaries for (reformed evangelical) pastors. Though some cursory attention is given to non-American commentaries, the vast majority recommend the same commentaries. And this is key: they recommend them not because those commentaries are the best, but because those are the commentaries that they (as American evangelicals, etc.) use.

I went through all of the featured reviewers on the BC website, gathered their biographies, and listed them below. If the recommendations are pulled from a book or blog, I list the publication dates.

| Reviewer | Affiliation | Audience |
| --- | --- | --- |
| D.A. Carson (2007) | TEDS, TGC | Theological students, ministers |
| Derek Thomas (2006) | RTS, ARC/PCA, Ligonier | Ministers |
| Matt Perman (2006) | Desiring God, Southern (MDiv) | Ministers/students |
| Fred Sanders | Biola, ETS | N/A |
| Kostenberger/Patterson (2011) | SEBTS/Liberty | Serious students of Scripture |
| Jim Rosscup (2003) | Master’s Seminary | Pastors (see note) |
| Joel Green (2015) | Fuller (UMC) | Students, pastors |
| Joel Beeke | Puritan Reformed TS | N/A |
| John Dyer | DTS | Students, pastors |
| John Glynn (2007) | ETS | Students and pastors |
| Keith Mathison (~2008) | Ligonier, Reformation BC | Pastors, students |
| Philip J Long (2012) | Grace Bible College | Blog audience |
| Robert Bowman Jr | Apologetics, ex-NAMB | N/A |
| Scot McKnight | Northern Seminary | Blog audience |
| Tim Challies (2013) | Pastor, blogger, TGC | Blog audience |
| Tremper Longman III (2007) | Westmont, Fuller, ETS | Pastors, students (see note) |

We can see a few details from this table. First, these featured reviewers seem to be weighted the same, meaning there is no difference between bloggers and academics, even though the latter are in a better position to gauge whether a commentary is successful or not. Second, the majority of these reviews are over ten years old. This means the reviews cannot consider newer commentaries which, in turn, gives the newer commentaries a lower score simply for being new. Third, all reviewers but one are American evangelical men. Many are also reformed and most (if not all) are white.

Example of ranking methodology

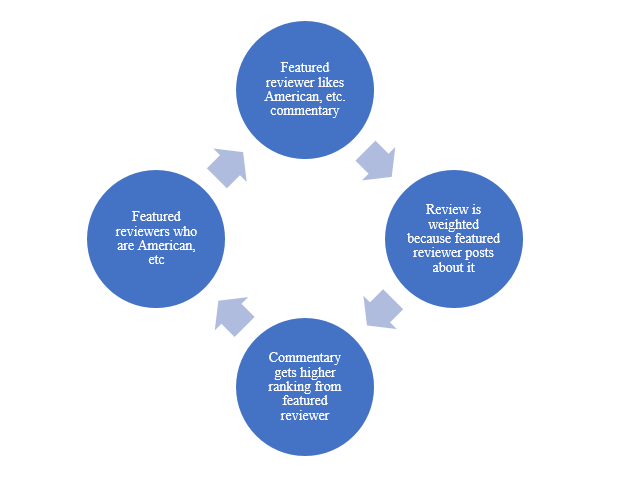

The algorithm displays a type of circular reasoning, as seen in the graph above. The (American, evangelical, etc.) reviewers commend commentaries that are popular in their own circles or commentaries that fit best within their audience’s use (e.g., Reformed churches). Because these commentaries are recommended at all, they rank highly on BC. Therefore, the best commentaries are the most popular commentaries that select reviewers use for their own purposes (preaching, etc.). Because most of the recommendations are for Reformed, evangelical pastors, the commentaries are approved for that audience. Some reviewers, like Rosscup, specifically commend commentaries that they deem to “show belief in the reliability of biblical statements,” which means they recommend conservative commentaries over others regardless of how successful the commentary actually is.

Best Commentaries is less a ranking of “best commentaries” and more a ranking of commentaries that white American evangelicals use.

To show this methodology at work, let’s look at the commentaries on John as an example. Köstenberger’s John commentary, which relies far too heavily on Carson’s John commentary, is rated second overall. That is to be expected, even though it did not receive great reviews outside evangelical circles. At the very bottom of the rankings, we find Bultmann, who wrote the most influential commentary ever written on John. Of course, we shouldn’t expect Bultmann’s commentary to be “the best” commentary on John since it is outdated and uses a questionable methodology, but it changed the face of Johannine studies and surely deserves a higher ranking than dead last!

As an example of American-centric ratings, we find Gary Burge’s NIV Application Commentary ranked over Andrew Lincoln’s commentary. William Hendriksen’s John commentary is very highly ranked despite being sorely outdated. Richard Burridge’s little commentary (Daily Bible) is not listed at all.

Again, BC is not measuring the commentaries’ merits or success of accomplishing the 5 jobs listed at the beginning. BC is measuring the popularity of commentaries within specific theological, geographical, and racial circles.

3. BC prioritizes non-specialist reviewers for specialist works.

Anyone can register and write a short review. BC’s own users may or may not have any credentials or give any real reason for their ratings. Looking at Craddock’s Luke commentary (which I highly recommend), we find two reviews: one positive and one negative. Because only two people rate Craddock, it falls to the bottom of the ranked ratings. In contrast, James R. Edwards’s Luke commentary is given 5 stars from a BC reviewer who says only “A great addition to the Pillar series. A fine and well written commentary on the Gospel of Luke.” Unless you are a Lukan scholar or own the book, you’d never know that Edwards’s commentary assumes Luke uses a Hebrew source as his major source (pp. 15-16), which is highly controversial.

The way the rating metrics work is that any review by a BC user is given some weight. Again, this quickly becomes a race to pick your favorite commentaries with or without any real reasons aside from “X recommended it.”

This is especially the case with works that featured reviewers or journals do not review. Take, for example, Kenneth Gangel’s John commentary (Holman). It appears in 4 users’ libraries and is given 5 stars from RBL even though the RBL review states clearly that it is not recommended: “Let us be absolutely clear at the outset by saying that this book will be of absolutely no use to the serious scholar of John—and be of only slightly more use to the lay reader seeking encouragement in her or his faith… We can in no terms recommend this volume to the reader.”

But BC’s methodology gives a 5 star review to the commentary because it was reviewed at all. More importantly, because it is in some users’ libraries, we find that Gangel’s commentary is ranked above, for example, Andrew Lincoln’s commentary which was reviewed highly (but critically) by Craig Keener.

Similarly, the RBL review for Bock’s Luke commentary concludes: “No one can complain that this commentary does other than what it set out to do. And although it will be appreciated by its intended, evangelical audience, even these readers will have to look elsewhere to get the full story.” Hardly a 5 star review!

The weight of users’ libraries seems to outweigh these critical reviews. These critical reviews are indiscriminately rated as 5 stars regardless of the content of the actual review. We are left with a ranking that prioritizes popularity rather than merit. And only popularity within the circles detailed above.

4. BC prioritizes white American, evangelical works.

Because the weighted reviewers are American evangelicals, they recommend largely the American evangelical commentaries. This is neither bad nor good, but we should be open and honest about the rating system.

Let’s look at Luke again. Chuck Swindoll and John MacArthur are listed higher than Judith Lieu, F. Scott Spencer, Justo González, Graham Twelftree, and Norval Geldenhuys. In no measurable way are Swindoll’s and MacArthur’s commentaries “better” than those works. Michael Wolter isn’t even listed, though his commentary has received rave reviews (now in English since last year). In reality, Swindoll and MacArthur are simply more popular within the American conservative traditions.

Again, we can see that BC’s algorithm calculates ranking based on popularity rather than merit while also prioritizing American evangelicals who may or may not be specialists.

Conclusion

Commentaries should be ranked on their merits and contributions rather than their popularity. BC ranks commentaries based on their popularity within American evangelicalism, particularly conservative Reformed circles and, as such, does not shed any light on the “best” commentaries.

BC succeeds in ranking commentaries, but only insofar as they are ranked by popularity among American evangelicals. As long as one realizes the biases within the website, BC has some benefit. Dyer’s website succeeds in aggregating reviews of varying helpfulness. Being able to sort by year is also very helpful.

In reality, biblical scholars desperately need a new website that pulls reviews only from journals like RBL, JSNT, etc. and from academic bloggers who are in some position to recommend commentaries based on the contributions they make to scholarship. Ideally, each entry should read as an annotated bibliography, containing 1-3 important points one should know about the commentary. To help fill the giant lacuna currently represented on BC, representation from minority groups, women, and mainline denominations would be especially welcome.

The question remains: should you use it? If you want to know the popular commentaries within the above circles, yes. If you want to see a reasonably up-to-date selection of works on a book/topic, then yes.

Should you consult it if you want to know the “best” commentaries based on their merit and contributions? Absolutely not.

Thanks to the members of the Facebook group Nerdy Theology Majors for their valuable feedback.
